Choosing the best floorplan is a crucial step in the physical design of an integrated circuit (IC), because it strongly affects the chip's performance, power consumption, and manufacturability. The floorplan sets the die and core dimensions, the locations of functional blocks, macros, and I/O pins or pads, and the rows and regions where standard cells are later placed. The key considerations and steps in deciding on the best floorplan are:

1. Block Placement:

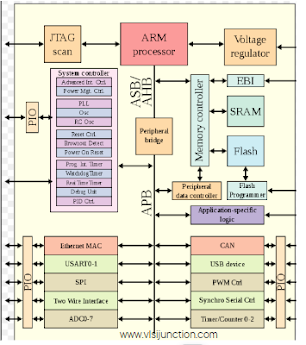

- Identify the different functional blocks, such as CPUs, memory, I/O, and custom blocks, that need to be placed on the chip.

- Consider the block sizes, aspect ratios, and power/thermal requirements of each block.
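Block sizes feed directly into how large the core must be. A common first-order estimate divides the total block area by a target utilization and then solves for width and height from the chosen aspect ratio. The sketch below assumes aspect ratio means height divided by width (tools differ on this convention), and the block names and areas are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Block:
    name: str
    area_um2: float  # area the block occupies, in square microns


def core_dimensions(blocks, utilization, aspect_ratio):
    """Estimate core width and height (um) from block areas.

    utilization:  fraction of the core the blocks should occupy, 0 < u <= 1.
    aspect_ratio: height / width (assumed convention).
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    if aspect_ratio <= 0:
        raise ValueError("aspect_ratio must be positive")
    core_area = sum(b.area_um2 for b in blocks) / utilization
    # Solve W * H = A and H / W = AR for W and H.
    width = math.sqrt(core_area / aspect_ratio)
    height = math.sqrt(core_area * aspect_ratio)
    return width, height


# Hypothetical block areas.
blocks = [Block("cpu", 400_000.0), Block("sram", 250_000.0), Block("io_ctrl", 50_000.0)]
w, h = core_dimensions(blocks, utilization=0.7, aspect_ratio=1.0)
print(f"core: {w:.1f} um x {h:.1f} um")
```

With 700,000 um² of blocks at 70% utilization this gives a core of about 1000 um by 1000 um; raising `aspect_ratio` to 4.0 keeps the same area but yields a 500 um by 2000 um core.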

2. Hierarchy and Partitioning:

- Decide whether a hierarchical floorplan is needed, in which blocks are grouped into partitions that are floorplanned at different levels of the hierarchy.

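- Partition the design into logical regions or modules based on functional requirements and connectivity, keeping tightly connected logic in the same partition to reduce the number of signals that cross partition boundaries.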
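A partition is usually judged by how many connections it cuts. The sketch below, a toy example with hypothetical module names, scores a two-way split by its cut size and brute-forces the balanced split with the smallest cut. Exhaustive search only works for a handful of modules; production partitioners rely on heuristics such as Kernighan-Lin or Fiduccia-Mattheyses instead.

```python
from itertools import combinations


def cut_size(nets, part_a):
    """Number of two-pin connections that cross between part_a and the rest."""
    return sum(1 for u, v in nets if (u in part_a) != (v in part_a))


def best_bisection(modules, nets):
    """Exhaustively search equal-size bisections for the minimum cut."""
    modules = list(modules)
    best = None
    for group in combinations(modules, len(modules) // 2):
        part_a = set(group)
        cost = cut_size(nets, part_a)
        if best is None or cost < best[0]:
            best = (cost, part_a, set(modules) - part_a)
    return best


# Hypothetical module connectivity; a repeated pair means two connections.
modules = ["cpu", "l1", "l2", "dma", "usb", "eth"]
nets = [("cpu", "l1"), ("cpu", "l1"), ("l1", "l2"), ("l2", "dma"),
        ("dma", "usb"), ("dma", "eth"), ("usb", "eth")]
cost, part_a, part_b = best_bisection(modules, nets)
print(cost, sorted(part_a), sorted(part_b))
```

Here the only crossing connection is `l2` to `dma`, so the processor-side and peripheral-side modules land in separate partitions with a cut of 1.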

3. Power and Signal Integrity:

- Place power-hungry blocks near power pads or bumps, where the power grid is strongest, to minimize voltage (IR) drop.

- Place blocks that drive or receive off-chip signals close to their I/O pads, which shortens those paths, reduces propagation delay, and helps preserve signal integrity.
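Why distance from the supply matters can be seen with Ohm's law on a single metal strap: its resistance is the sheet resistance times the number of squares (length over width), and the drop is current times that resistance. The sketch assumes one strap fed from one end with all the block's current drawn at the far end; the current, width, and sheet resistance are hypothetical.

```python
def strap_ir_drop(current_a, length_um, width_um, sheet_res_ohm_per_sq):
    """First-order IR drop (V) for a block drawing current_a at the far end
    of a single power strap fed from a pad at the other end."""
    squares = length_um / width_um               # strap length in "squares"
    resistance = sheet_res_ohm_per_sq * squares  # R = R_s * L / W
    return current_a * resistance                # V = I * R


# Hypothetical values: 10 mA block, 10 um wide strap, 0.02 ohm/sq metal.
for distance_um in (100, 500, 1000):
    drop_mv = strap_ir_drop(0.010, distance_um, 10.0, 0.02) * 1000
    print(f"{distance_um:5d} um from pad -> {drop_mv:.1f} mV")
```

In this model the drop grows linearly with distance (2 mV, 10 mV, 20 mV). Real power grids are meshes with many parallel paths and distributed loads, so this only illustrates the trend, not a sign-off number.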

4. Clock Tree:

- Plan the clock distribution network and place clock sources (oscillators, PLLs) so that the paths to the clock sinks they drive are short and balanced, which helps minimize clock skew and clock power.
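One simple heuristic for locating a clock source, assumed here rather than taken from the text above, is to put it where the worst-case Manhattan distance to the clock-sink clusters it drives is smallest, so that no branch of the tree is far longer than the others. Rotating coordinates to u = x + y and v = x - y turns Manhattan distance into Chebyshev distance, whose minimax center is just the midpoint of the u and v ranges. The sink coordinates are hypothetical.

```python
def minimax_manhattan_center(points):
    """Return a point minimizing the maximum Manhattan distance to points."""
    us = [x + y for x, y in points]   # rotated coordinates: Manhattan distance
    vs = [x - y for x, y in points]   # becomes Chebyshev distance in (u, v)
    u_c = (min(us) + max(us)) / 2
    v_c = (min(vs) + max(vs)) / 2
    return ((u_c + v_c) / 2, (u_c - v_c) / 2)  # rotate back to (x, y)


def max_manhattan(center, points):
    cx, cy = center
    return max(abs(x - cx) + abs(y - cy) for x, y in points)


# Hypothetical centroids (um) of clock-sink clusters in four blocks.
sinks = [(100, 100), (900, 150), (200, 800), (850, 900)]
pll = minimax_manhattan_center(sinks)
print(pll, max_manhattan(pll, sinks))
```

For these sinks the center is (525, 450) with a worst-case distance of 775 um; no location can do better, because the two farthest-apart sinks are 1550 um apart. Actual clock tree synthesis balances delay with buffers and wire, so this is only a starting point for the source location.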

5. Noise and Interference:

- Minimize coupling between noisy blocks (e.g., clock generators) and sensitive analog or RF blocks.

- Place noisy or high-frequency blocks away from critical signal paths to avoid interference.
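Keeping noisy and sensitive blocks apart can be checked mechanically once blocks are rectangles. The sketch below computes the edge-to-edge distance between axis-aligned rectangles and flags noisy/sensitive pairs closer than a minimum gap. The block names and the 200 um threshold are hypothetical placeholders, not a real isolation rule.

```python
import math
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Rect:
    name: str
    x0: float
    y0: float
    x1: float
    y1: float


def gap(a, b):
    """Shortest distance between two axis-aligned rectangles (0 if touching or overlapping)."""
    dx = max(0.0, b.x0 - a.x1, a.x0 - b.x1)
    dy = max(0.0, b.y0 - a.y1, a.y0 - b.y1)
    return math.hypot(dx, dy)


def spacing_violations(noisy, sensitive, min_gap_um):
    """List (noisy, sensitive, gap) pairs that are closer than min_gap_um."""
    violations = []
    for n, s in product(noisy, sensitive):
        d = gap(n, s)
        if d < min_gap_um:
            violations.append((n.name, s.name, d))
    return violations


noisy = [Rect("clkgen", 0, 0, 100, 100), Rect("dcdc", 800, 0, 1000, 200)]
sensitive = [Rect("adc", 150, 0, 300, 150), Rect("lna", 600, 700, 800, 900)]
violations = spacing_violations(noisy, sensitive, min_gap_um=200.0)
print(violations)
```

Only the clock generator and the ADC, 50 um apart, are flagged; every other pair is at least 500 um apart.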

6. Routing and Wirelength:

- Arrange blocks to minimize total wirelength between them, which reduces signal delay and power consumption.

- Keep heavily connected blocks adjacent, and leave enough routing space between blocks so the interconnect does not become congested.
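Floorplanners commonly estimate wirelength with the half-perimeter wirelength (HPWL): the half-perimeter of the bounding box around each net's pins, summed over all nets. The sketch below assumes each block's pins sit at a single point (for example, the block center); the block names, coordinates, and nets are hypothetical.

```python
def hpwl(net_pins):
    """Half-perimeter wirelength of one net given its pin (x, y) locations."""
    xs = [x for x, _ in net_pins]
    ys = [y for _, y in net_pins]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def total_hpwl(nets, positions):
    """Sum HPWL over nets; each net lists block names, positions maps name -> (x, y)."""
    return sum(hpwl([positions[b] for b in net]) for net in nets)


# Hypothetical block locations (um) and multi-pin nets.
positions = {"cpu": (0, 0), "l2": (300, 0), "ddr_phy": (900, 400), "dma": (300, 400)}
nets = [["cpu", "l2"], ["l2", "dma", "ddr_phy"], ["cpu", "dma"]]
print(total_hpwl(nets, positions))
```

The three nets contribute 300, 1000, and 700 um, for a total of 2000 um. Comparing this number across candidate arrangements is a cheap way to rank them before detailed routing.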

7. Heat Dissipation:

- Distribute heat-generating blocks to avoid localized hotspots. Place power-hungry blocks near heat sinks or cooling mechanisms.
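A quick way to spot localized hotspots is to spread each block's power uniformly over its area, sum it into square tiles, and look at the power density per tile. The sketch below does this; the block coordinates, power values, and 500 um tile size are hypothetical, and real thermal analysis also models how heat spreads laterally through the die and package.

```python
import math
from collections import defaultdict


def power_density_map(blocks, tile_um):
    """Return {(col, row): W/mm^2}, spreading each block's power uniformly
    over its rectangle and summing it into square tiles of side tile_um."""
    tile_power = defaultdict(float)
    for x0, y0, x1, y1, watts in blocks:
        density = watts / ((x1 - x0) * (y1 - y0))  # W per um^2
        for col in range(math.floor(x0 / tile_um), math.ceil(x1 / tile_um)):
            for row in range(math.floor(y0 / tile_um), math.ceil(y1 / tile_um)):
                # Overlap of the block with this tile.
                ox = min(x1, (col + 1) * tile_um) - max(x0, col * tile_um)
                oy = min(y1, (row + 1) * tile_um) - max(y0, row * tile_um)
                if ox > 0 and oy > 0:
                    tile_power[(col, row)] += density * ox * oy
    tile_area_mm2 = (tile_um / 1000.0) ** 2
    return {tile: p / tile_area_mm2 for tile, p in tile_power.items()}


# Hypothetical blocks: (x0, y0, x1, y1) in um and power in W.
blocks = [
    (0, 0, 500, 500, 2.0),      # CPU cluster
    (500, 0, 1000, 500, 0.6),   # SRAM
    (0, 500, 1000, 1000, 0.5),  # peripherals
]
density_map = power_density_map(blocks, tile_um=500)
hotspot = max(density_map, key=density_map.get)
print(hotspot, round(density_map[hotspot], 2))
```

The CPU tile reaches 8 W/mm² while its neighbors stay at 1 to 2.4 W/mm², which is the kind of imbalance that suggests spreading the power-hungry logic out.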

8. Symmetry and Regularity:

- Use symmetry and regularity in floorplan design to simplify routing and achieve balanced performance across different regions.
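Symmetry can be verified directly from block rectangles. The sketch below checks that every block has a same-size partner mirrored about a vertical axis, with a block centered on the axis counting as its own mirror. It assumes blocks do not overlap, so each match is unique; the block names and coordinates are hypothetical.

```python
def is_mirror_symmetric(blocks, axis_x, tol=1e-6):
    """Return True if every block has a same-size partner mirrored about x = axis_x.

    blocks: list of (name, x0, y0, x1, y1).
    """
    rects = [b[1:] for b in blocks]

    def mirror(rect):
        x0, y0, x1, y1 = rect
        return (2 * axis_x - x1, y0, 2 * axis_x - x0, y1)

    def matches(r1, r2):
        return all(abs(a - b) <= tol for a, b in zip(r1, r2))

    return all(any(matches(mirror(r), other) for other in rects) for r in rects)


# Hypothetical matched pair of ADC channels around a shared reference.
layout = [
    ("adc_l", 100, 0, 300, 200),
    ("adc_r", 700, 0, 900, 200),
    ("vref", 450, 0, 550, 100),
]
print(is_mirror_symmetric(layout, axis_x=500))   # True
shifted = layout[:1] + [("adc_r", 710, 0, 910, 200)] + layout[2:]
print(is_mirror_symmetric(shifted, axis_x=500))  # False
```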

9. Design Rules and DRC:

- Make sure the floorplan is manufacturable by respecting the design rules and passing design rule checks (DRC). Align blocks to the placement and routing grids, and account for metal pitch, spacing, and other lithographic constraints.
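A routine part of this is snapping macro origins onto a grid, for example the placement-site width horizontally and the row height vertically. The sketch below snaps down using integer database units (DBU), which avoids floating-point rounding surprises. The 1000 DBU per um scale and the 200 and 1200 DBU pitches are hypothetical values, not taken from any particular technology.

```python
def snap_down(value_dbu, pitch_dbu, offset_dbu=0):
    """Snap an integer coordinate down onto a grid with the given pitch and offset."""
    return offset_dbu + ((value_dbu - offset_dbu) // pitch_dbu) * pitch_dbu


def snap_macro(origin_dbu, x_pitch_dbu, y_pitch_dbu):
    """Snap a macro's (x, y) origin onto the x and y grids."""
    x, y = origin_dbu
    return snap_down(x, x_pitch_dbu), snap_down(y, y_pitch_dbu)


# Hypothetical: 1 um = 1000 DBU, 0.2 um site width, 1.2 um row height.
print(snap_macro((123_456, 78_901), x_pitch_dbu=200, y_pitch_dbu=1_200))
```

The origin (123456, 78901) snaps to (123400, 78000). Snapping down rather than to the nearest point is a design choice; some flows round to the nearest legal location instead.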

10. Iterative Refinement:

- Floorplanning is often an iterative process. Use floorplanning tools to experiment with different arrangements and assess the impact on performance metrics.

11. Tool-Driven Optimization:

- Use floorplanning tools whose optimization algorithms can automatically generate or refine floorplans against specified objectives.
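Simulated annealing is one classic optimization technique used for floorplanning; it is named here as an illustration, not as what any specific tool does. The toy sketch below assigns blocks to a fixed grid of slots, proposes random pairwise swaps, always accepts improvements, and accepts worse moves with probability exp(-delta / T) as the temperature T cools. The slot grid, blocks, nets, and schedule parameters are all hypothetical, and real floorplanners also handle block shapes and aspect ratios.

```python
import math
import random


def hpwl_cost(assignment, slots, nets):
    """Total half-perimeter wirelength with each block at its slot's center."""
    total = 0.0
    for net in nets:
        xs = [slots[assignment[b]][0] for b in net]
        ys = [slots[assignment[b]][1] for b in net]
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total


def anneal(blocks, slots, nets, t0=100.0, cooling=0.95, t_min=0.1,
           moves_per_temp=50, seed=0):
    """Simulated annealing over block-to-slot assignments (len(slots) == len(blocks))."""
    rng = random.Random(seed)
    assignment = {b: i for i, b in enumerate(blocks)}  # start: block i in slot i
    cost = hpwl_cost(assignment, slots, nets)
    best, best_cost = dict(assignment), cost
    t = t0
    while t > t_min:
        for _ in range(moves_per_temp):
            a, b = rng.sample(blocks, 2)
            assignment[a], assignment[b] = assignment[b], assignment[a]
            new_cost = hpwl_cost(assignment, slots, nets)
            delta = new_cost - cost
            # Accept improvements; accept uphill moves with probability exp(-delta / t).
            if delta <= 0 or rng.random() < math.exp(-delta / t):
                cost = new_cost
                if cost < best_cost:
                    best, best_cost = dict(assignment), cost
            else:
                assignment[a], assignment[b] = assignment[b], assignment[a]  # undo swap
        t *= cooling  # geometric cooling schedule
    return best, best_cost


# Hypothetical 2x2 slot grid (um) and a ring of block-to-block nets.
slots = [(0, 0), (100, 0), (0, 100), (100, 100)]
blocks = ["cpu", "io", "ddr", "gpu"]
nets = [["cpu", "ddr"], ["cpu", "gpu"], ["gpu", "io"], ["ddr", "io"]]
print("initial:", hpwl_cost({b: i for i, b in enumerate(blocks)}, slots, nets))
best, best_cost = anneal(blocks, slots, nets)
print("annealed:", best_cost, best)
```

The initial assignment costs 600 um. Because the four nets form a ring, every connection can sit between adjacent slots, so the best achievable cost is 400 um.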

12. Trade-offs and Metrics:

- Define design objectives and metrics such as performance, power, area, and signal integrity. Make trade-offs between these metrics to achieve the best compromise.
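One simple way to make these trade-offs explicit is a weighted sum of metrics, each normalized to a baseline floorplan so that different units can be combined. The sketch below uses hypothetical metric names, values, and weights, all of which are lower-is-better. It shows how changing the weights changes which candidate wins.

```python
def weighted_cost(metrics, baseline, weights):
    """Weighted sum of each metric normalized to a baseline (lower is better)."""
    return sum(w * metrics[k] / baseline[k] for k, w in weights.items())


def pick_best(candidates, baseline, weights):
    """Return the lowest-scoring candidate name and all scores."""
    scores = {name: weighted_cost(m, baseline, weights) for name, m in candidates.items()}
    return min(scores, key=scores.get), scores


# Hypothetical metrics for a baseline and two candidate floorplans.
baseline = {"delay_ns": 1.00, "power_mw": 500.0, "area_mm2": 4.0}
candidates = {
    "A": {"delay_ns": 0.95, "power_mw": 520.0, "area_mm2": 4.2},  # faster, larger
    "B": {"delay_ns": 1.02, "power_mw": 470.0, "area_mm2": 3.9},  # slower, leaner
}
balanced = {"delay_ns": 0.5, "power_mw": 0.3, "area_mm2": 0.2}
timing_first = {"delay_ns": 0.7, "power_mw": 0.2, "area_mm2": 0.1}
print(pick_best(candidates, baseline, balanced)[0])      # B
print(pick_best(candidates, baseline, timing_first)[0])  # A
```

With balanced weights, B scores about 0.987 against A's 0.997; weighting timing more heavily reverses the choice.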

13. Consulting Experts:

- Collaborate with experienced physical design engineers, as their insights can help you make informed decisions based on the specifics of the design.